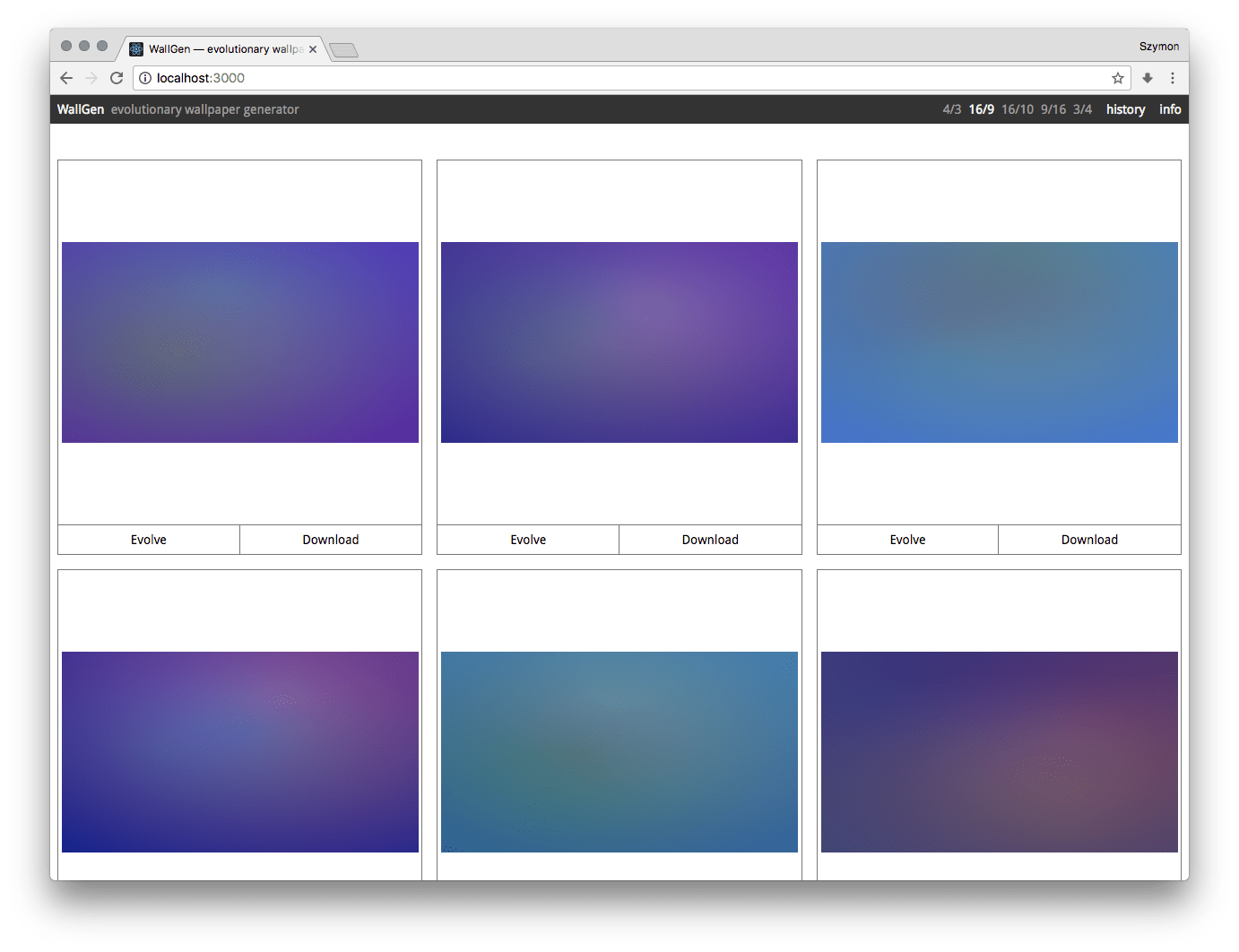

Wallgen is an evolutionary wallpaper generator using genetic algorithm to create never ending list of abstract ambient wallpapers. It was built during my 1-project-a-month meta-project in February 2017.

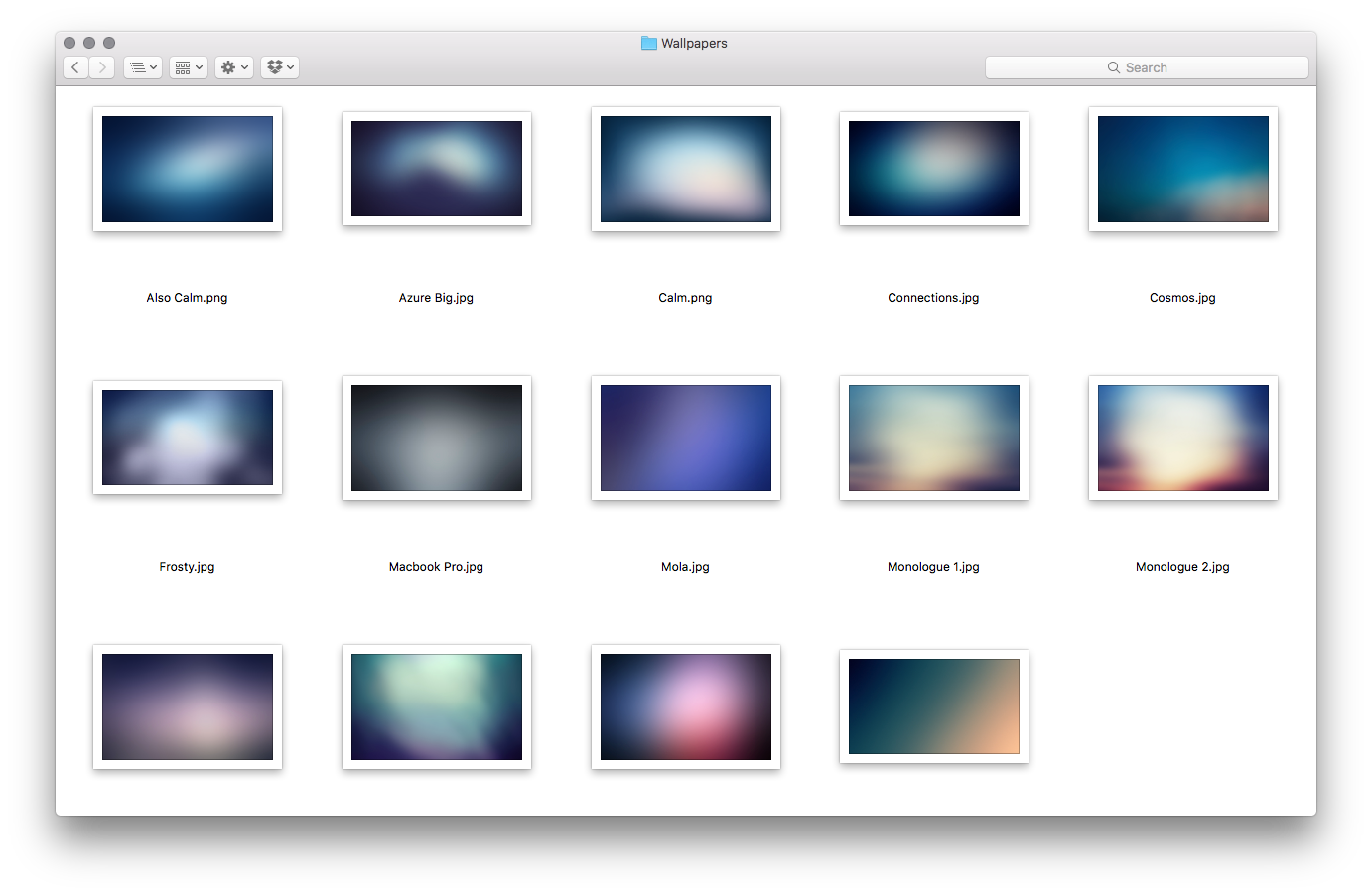

My favourite desktop wallpapers are calming blurry colors, but it's hard to find them and I lack enough Photoshop skills to make something I enjoy looking at.

My solution to this "problem" was to try to generate them with code, ideally in a way that I could potentially get an endless stream of nice soothing abstract blurs.

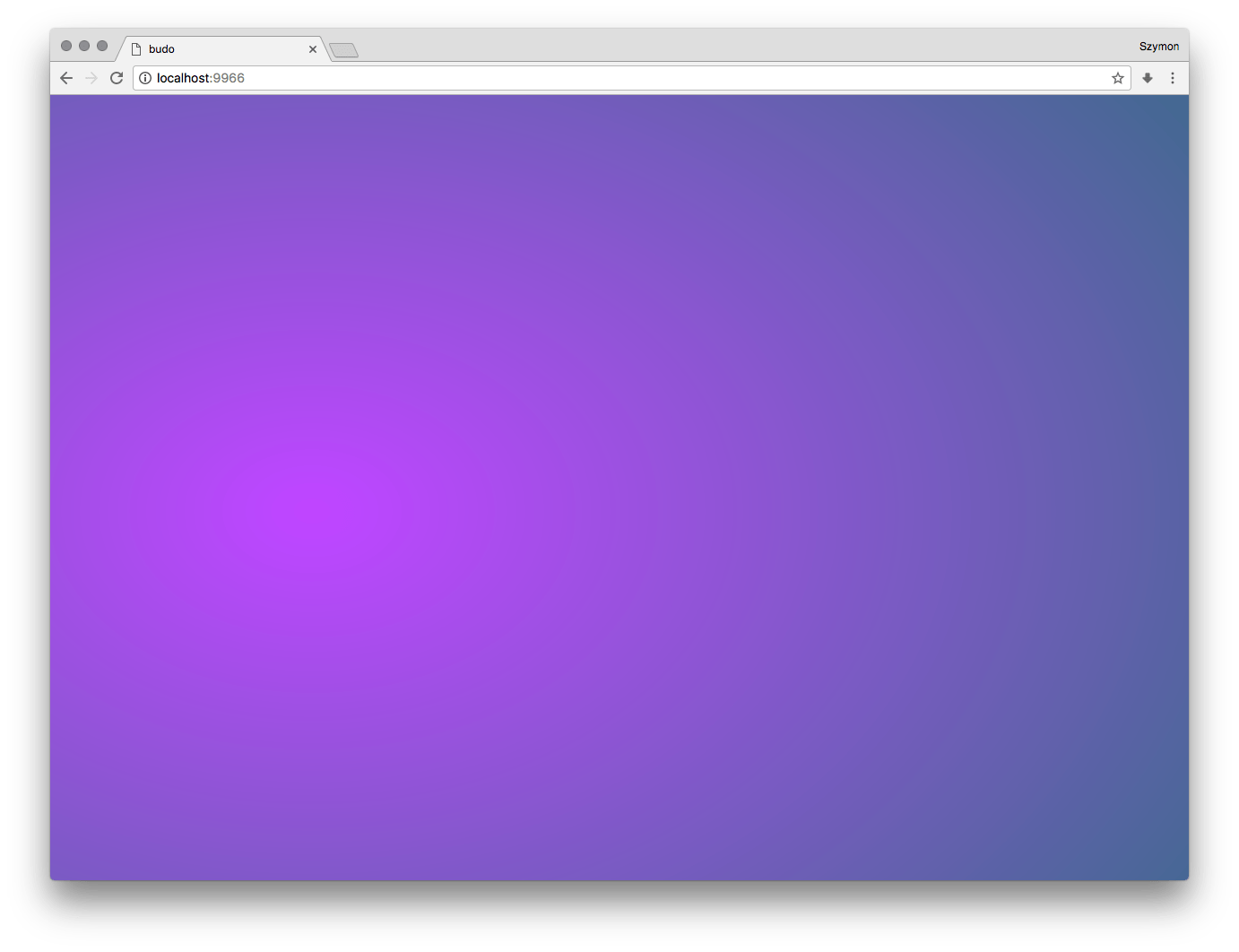

The first step was to actually get something on a screen, and to get nice and performant fullscreen gradients, I jumped straight to GLSL. It would be possible to use CSS gradients for example, but for anything more complex than linear/radial gradient it would get tough.

I thought of these kinds of blurs as a set of points which emanate colours. Combining multiple points with nice color palette and proper placing together could create images similar to what I was looking for.

I got to what seemed like a good approach using distance and mix in GLSL.

Most basic gradient looked like this:

Rendered with this small piece of code:

precision mediump float;

uniform vec2 iResolution;

void main() {

vec3 color1 = vec3(0.75, 0.31, 1.0);

vec3 color2 = vec3(0.232, 0.42, 0.529);

vec2 gradientPoint = vec2(0.23, 0.47);

vec2 pos = gl_FragCoord.xy / iResolution.xy;

float dist = distance(pos, gradientPoint);

gl_FragColor = vec4(mix(color1, color2, dist), 1.0);

}Getting one shader to work was the easy part, but I wanted endless, similar, but not exactly the same wallpapers.

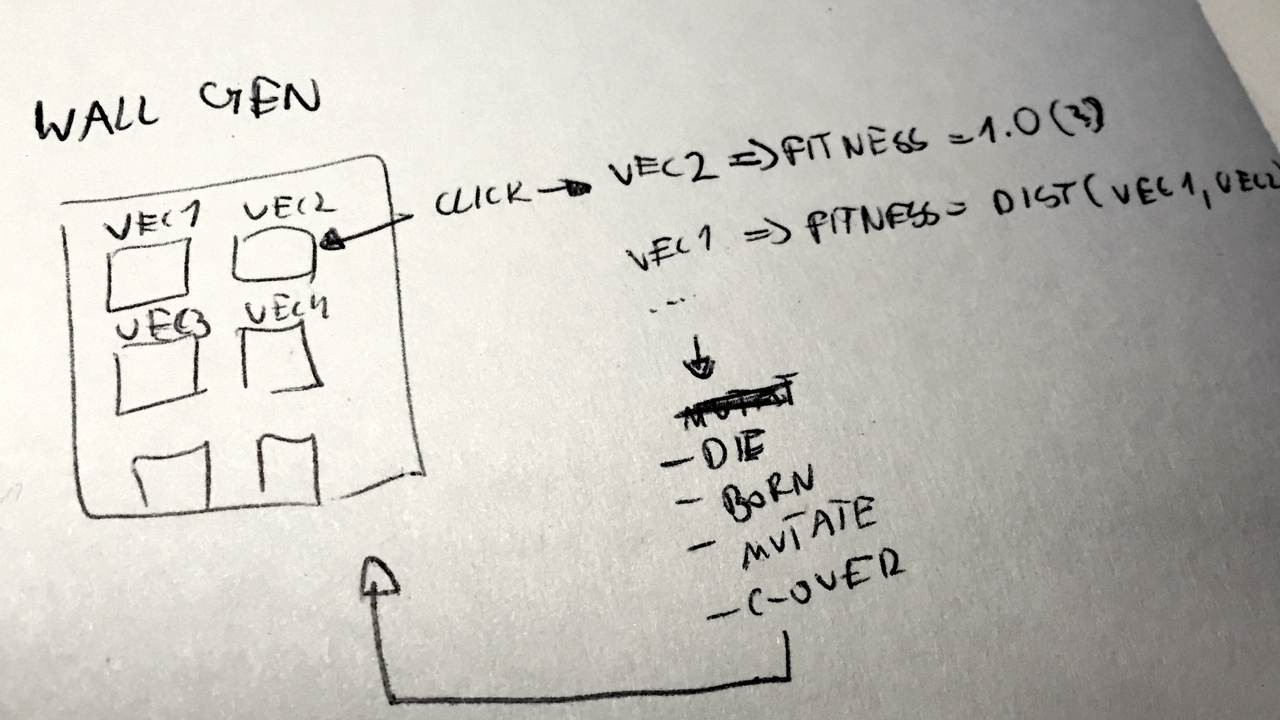

I started toying with this idea few months back, first approaching this as a k-NN problem, with a logic resembling this one:

- generate a lot of random wallpapers

- allow user to select a set of "target wallpapers"

- keep coming up with random wallpapers until they are close enough (k-NN) to the set of target wallpapers

This has worked awfully — basically, I had been trying to keep coming up with random values until I found a subset close enough to what I like.

The next step was to try to reverse this process — to only come up with random values near the "target" set. An evolutionary algorithm seemed like an obvious solution — starting with random population of wallpapers and steering the process toward user-selected goal.

Daniel Shiffman has a great explanation of genetic algorithms in his "Nature of Code" book: natureofcode.com/book/chapter-9-the-evolution-of-code/ so I'm not going to go into the details here.

The most challenging problem in interactive evolution is coming up with a nice balance between number of choices presented to the user and calculations based around fitness function. I didn't want to ask user to rate each of possibly thousands of generated wallpapers, and I came up with a simple solution — the user selects just one wallpaper to "evolve", and fitness of the rest of the wallpapers is calculated as distance from user selected one. Wallpaper DNA is represented as an array of normalized floating point values, so calculating distances between these arrays is easy, although far away from what happens in nature — I'm not even comparing phenotypes, and two arrays which are very similar in DNA could potentially yield very unalike images. But it was good enough for this experiment, and I couldn't come up with any other ideas anyways.

I try to follow simple set of rules when coding: make it work, make it right, make it fast.

After getting to the point where my generated images started to look similar but different to the previous choices, and after making the code readable and functional (everything operating on immutable arrays and returning new immutable arrays) I ended up in a situation where bigger populations could evolve for few seconds, and since JavaScript is single-threaded it would block the browser. I had been trying to optimize the code for an afternoon, but couldn't speed it up by a factor of 10x, so I decided to move the calculations to webworker — basically a separate thread to execute JavaScript in, which can communicate asynchronously with the main rendering thread.

Since I used Immutable.js for handling the data, I would have to first convert them to plain JavaScript values, then into JSON and do the opposite in webworker, and then after calculations are done do it again in another direction. Stringifying with JSON can get quite slow, and since I could potentially use thousands of arrays, each with hundreds of floating point values, I was getting worried of potential bottleneck. Luckily I stumbled upon transit-immutable-js which can turn immutable structures into transit strings directly.

Still, after doing some tests, running evolution in webworker is slower than running the same code on a main thread (consistently by few percent). I suspect it's because of an overhead of transit-ing and transmitting data, but it gives us an illusion of things happening much smoother, which is worth few extra milliseconds.

After each evolution step, the whole population is replaced with a new one. I wanted to make this easy on the eyes, and since each genotype is an array of numbers (with constant length) it was easy to "morph" them into each other.

I render GLSL with amazing gl-react so I just had to re-calculate that genotype array to get my transitions.

I wanted to make this automatic as well, and I ended up with a simple loop function powered by requestAnimationFrame (I'm using raf here) — every time the phenotype component receives new genotype data it starts the update loop, which goes on until current rendered values equal the target ones.

Thanks to Immutable.js comparing two arrays for equality requires just .is(), and is very fast.

Below is a relevant part of phenotype component class, whole file is on github: phenotype.js.

updateLoop() {

const eps = 0.01;

const k = 0.9;

// move towards target code

const code = this.state.code.map((v, i) => {

const dist = abs(v - this.props.code.get(i));

return dist < eps ? this.props.code.get(i) : k * v + (1 - k) * this.props.code.get(i);

});

this.setState({ code });

if (!code.is(this.props.code)) {

// keep updating if current code is different from target one

this.rafHandle = raf(this.updateLoop);

}

else {

raf.cancel(this.rafHandle);

this.rafHandle = undefined;

}

}

componentWillReceiveProps(nextProps) {

if (!nextProps.code.is(this.state.code) && !this.rafHandle) {

// start animating if target differs from current code

this.rafHandle = raf(this.updateLoop);

}

}This project was simpler than January's SDF-UI, and took 6 hours less to make (totaling in almost 24 hours of development time). There was a lot to figure out, and I ended up learning about webworkers, and implementing my first evolutionary algorithm after I finished college.

Like the last time, there's a lot that could be improved, but March is almost there, and I'm going to move on to something else.

You can play with live version here: http://szymonkaliski.github.io/wallgen.

Code is open sourced and available with MIT license here: szymonkaliski/wallgen.

You can find more details in this blog post: building wallgen.